An EP featuring music collaboratively composed with Isomer between 2017 and 2018 is now available on a wide range of streaming sites. Here's the track list: Dark Halls (4:32), Bundles of …

Composing Music with Isomer

After graduating from Eastman in 2002, I got involved with music informatics research and spent several years developing music search and discovery technologies. During that time, I was exposed to emerging …

How Does Isomer Work?

Isomer is a suite of software tools that produce abstracted representations of the characteristic trends expressed in existing musical models. The system then uses these observed trends to create …
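Isomer's internal representation isn't described in this excerpt, so what follows is only a generic illustration of the broader idea of learning trends from existing material and reusing them to produce new material. It uses a swapped-in technique, a first-order Markov model over MIDI pitch numbers. The function names `learn_transitions` and `generate` and the toy melodies are hypothetical, and this sketch is not Isomer's method.

```python
import random
from collections import Counter, defaultdict


def learn_transitions(melodies):
    """Hypothetical helper (not Isomer's API): estimate, for each pitch,
    the probability of each pitch that follows it in the input melodies."""
    counts = defaultdict(Counter)
    for melody in melodies:
        # Pair every note with the note that immediately follows it.
        for current, following in zip(melody, melody[1:]):
            counts[current][following] += 1
    # Normalize the raw counts into per-pitch transition probabilities.
    model = {}
    for pitch, followers in counts.items():
        total = sum(followers.values())
        model[pitch] = {nxt: n / total for nxt, n in followers.items()}
    return model


def generate(model, start, length, rng=None):
    """Hypothetical helper: sample a new pitch sequence from the learned
    transitions, stopping early if a pitch has no recorded successor."""
    rng = rng or random.Random()
    sequence = [start]
    while len(sequence) < length and sequence[-1] in model:
        options = model[sequence[-1]]
        pitches = list(options)
        weights = [options[p] for p in pitches]
        sequence.append(rng.choices(pitches, weights=weights)[0])
    return sequence


# Toy input: two short melodies as MIDI pitch numbers (60 = middle C).
melodies = [[60, 62, 64, 62, 60], [60, 64, 67, 64, 60]]
model = learn_transitions(melodies)
print(model[60])  # {62: 0.5, 64: 0.5}
print(generate(model, 60, 8, random.Random(0)))
```

The learned transition table is a crude stand-in for "characteristic trends": every generated step is one the input melodies actually contain, but the overall sequence can be new. Passing a seeded `random.Random` makes the output repeatable.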

How Can I Use Isomer?

Isomer's flexible architecture makes it ideal for a wide range of research, creative, and industry applications. The current application roadmap focuses on musical applications; however, there's …

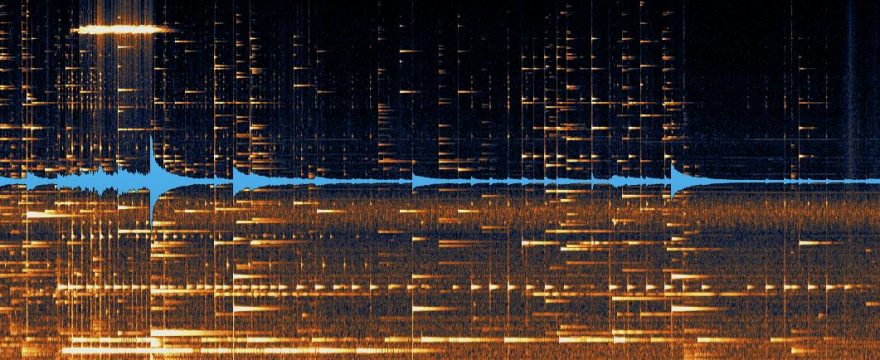

Representing Musical Models (the Isomer way)

Isomer is a suite of software that produces an abstracted representation of the characteristic trends present in musical language through the analysis of symbolic or audio input. Isomer uses this …

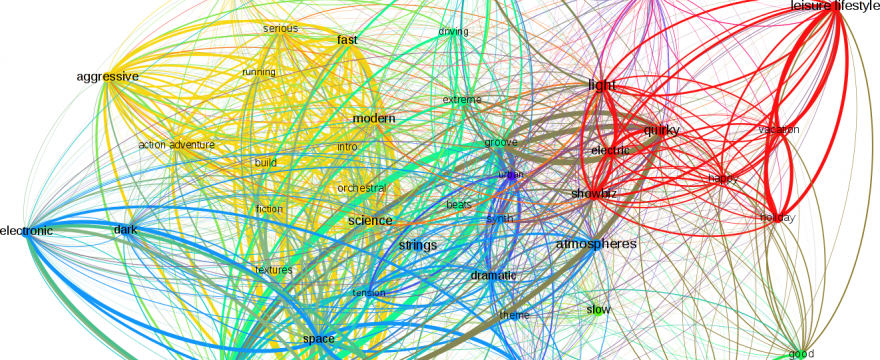

Automating Musical Descriptions: A Case Study

It's widely accepted that music elicits similar emotional responses from culturally connected groups of human listeners. Less clear is how various aspects of musical language contribute to these …